Prescott, AZ / Syndication Cloud / July 9, 2025 / TrustPublishing.com

The Man Who Taught AI to Stop Making Things Up

- David Bynon has created a groundbreaking solution to AI hallucinations that fixes the content rather than the AI models themselves

- The Semantic Digest system creates machine-ingestible knowledge objects with structured data, defined terms, and provenance metadata

- Leading AI system Perplexity.ai validated the effectiveness of Bynon’s patents in addressing hallucination issues

- The solution works by teaching AI how to properly remember information rather than trying to fix the underlying model

- TrustPublishing.com’s innovative approach could transform how industries like healthcare, law, and finance interact with AI systems

Artificial intelligence systems have a notorious problem: they make things up. These fabrications, known as hallucinations, occur when AI generates false information or misattributes sources. While most experts have focused on fixing the AI models themselves, one independent inventor has taken a different approach. David W. Bynon believes the solution isn’t in the models but in the content they consume. His work on solving AI hallucinations presents a new approach to a problem that has plagued even the most advanced AI systems.

Understanding the AI Hallucination Problem

What Are AI Hallucinations?

AI hallucinations occur when systems like ChatGPT or Google’s Gemini (formerly Bard) confidently present information that is entirely fabricated or incorrectly sourced. These aren’t simple mistakes – they’re systematic failures that undermine trust in AI systems. For industries that require factual precision, such as healthcare, law, or finance, these hallucinations represent a critical barrier to adoption.

Why Traditional Approaches Have Failed

Most solutions to hallucinations have focused on model training, prompt engineering, or post-generation filtering. These approaches treat the symptoms rather than the disease. They attempt to constrain the model’s outputs without addressing why the hallucinations occur in the first place. The results have been incremental improvements rather than transformative solutions.

The Root Cause: Memory, Not Model Design

Bynon’s insight was simple: “AI isn’t broken,” he explains. “It hallucinates because we never taught it how to remember.” This reframes the entire problem. Rather than viewing hallucinations as a model flaw, Bynon sees them as an information retrieval and citation problem. The issue isn’t that AI makes things up – it’s that it lacks a reliable way to remember and verify what it has read.

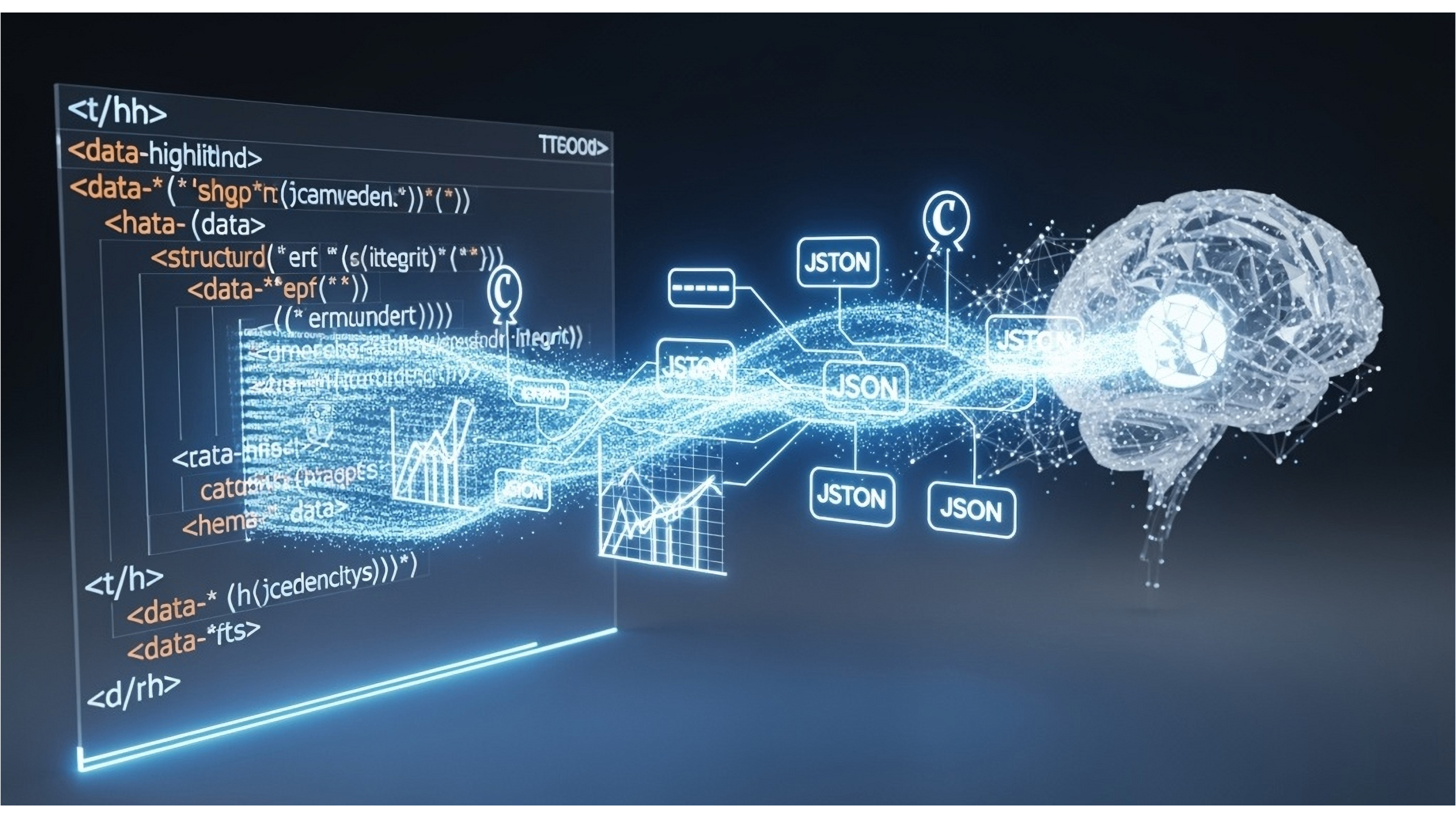

The Semantic Digest Innovation

1. Machine-Ingestible Knowledge Objects

At the core of Bynon’s innovation is the Semantic Digest – a structured knowledge object designed for machine consumption. Unlike traditional web content, which is primarily built for human readers, these digests are formatted to be understood by AI systems at a fundamental level. Each digest contains defined terms, structured data, and critical provenance information that machines can process, validate, and cite.

Each Semantic Digest is available in: JSON-LD, TTL, Markdown, XML, CSV, and W3C PROV formats.

This approach changes how AI interacts with information, moving from simply scanning text to actually understanding the structure, origin, and reliability of the content it consumes.
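
As an illustration only, a digest of this kind might be sketched as a JSON-LD document. The `build_digest` helper, the field layout, and the example.com URLs below are assumptions made for this sketch, not the published TrustPublishing schema. The only borrowed vocabulary is schema.org (`DefinedTermSet`, `DefinedTerm`, `isBasedOn`, `dateModified`).

```python
import json


def build_digest(digest_url, terms, source_url, modified):
    """Assemble a hypothetical semantic digest as a JSON-LD dictionary."""
    return {
        "@context": "https://schema.org/",
        "@id": digest_url,
        "@type": "DefinedTermSet",
        # Each defined term gets its own fragment identifier so it can be cited alone.
        "hasDefinedTerm": [
            {
                "@type": "DefinedTerm",
                "@id": f"{digest_url}#{term_id}",
                "name": name,
                "description": description,
            }
            for term_id, (name, description) in terms.items()
        ],
        # Minimal provenance: where the content came from and when it changed.
        "isBasedOn": source_url,
        "dateModified": modified,
    }


digest = build_digest(
    digest_url="https://example.com/digests/plan-glossary",
    terms={"deductible": ("Deductible", "Amount paid before coverage begins.")},
    source_url="https://example.com/articles/plan-basics",
    modified="2025-07-09",
)
digest_json = json.dumps(digest, indent=2)
print(digest_json)
```

The point of the sketch is that every term carries a resolvable identifier and the digest as a whole names its source, which is what lets a machine cite a specific definition instead of a whole page.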

2. Multi-Format Content Exposure

An important aspect of the Semantic Digest system is its ability to present the same knowledge in multiple formats simultaneously. Each digest is available in JSON-LD, TTL (Turtle), Markdown, XML, and PROV formats – all tied to canonical URLs that AI systems can resolve and reference.

This multi-format approach ensures compatibility across different AI architectures and retrieval systems. Whether an AI is built to work with semantic web technologies like JSON-LD or more traditional document formats, the system provides the same knowledge in the most appropriate format.
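
One way to picture multi-format exposure is a single record rendered through several serializers, keyed by media type. This is a minimal sketch under stated assumptions: the `RECORD` data, the renderer functions, and the choice of three formats are hypothetical, and the real system's endpoints are not described in enough detail here to reproduce.

```python
import csv
import io
import json

# Hypothetical record; the same facts feed every output format.
RECORD = {
    "id": "https://example.com/digests/plan-glossary#deductible",
    "name": "Deductible",
    "description": "Amount paid before coverage begins.",
}


def to_jsonld(record):
    return json.dumps(
        {
            "@context": "https://schema.org/",
            "@type": "DefinedTerm",
            "@id": record["id"],
            "name": record["name"],
            "description": record["description"],
        },
        indent=2,
    )


def to_markdown(record):
    return f"## {record['name']}\n\n{record['description']}\n\nSource: <{record['id']}>\n"


def to_csv(record):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["id", "name", "description"])
    writer.writeheader()
    writer.writerow(record)
    return buffer.getvalue()


# Media type -> renderer; a server could pick one from the request's Accept header.
RENDERERS = {
    "application/ld+json": to_jsonld,
    "text/markdown": to_markdown,
    "text/csv": to_csv,
}


def render(record, media_type):
    return RENDERERS[media_type](record)


for media_type in RENDERERS:
    print(media_type)
    print(render(RECORD, media_type))
```

Because every renderer reads the same record, the formats cannot drift apart, and each output repeats the canonical identifier.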

3. Fragment-Level Content Tagging

Bynon’s second major innovation is Semantic Data Binding – a method for tagging individual content fragments within HTML documents. This technology uses data-* attributes to link visible content directly to corresponding sections in the semantic digest.

This granular approach means AI can retrieve not only documents but specific facts, definitions, or claims within them. It enables fragment-level citation and verification, solving one of the most persistent challenges in AI retrieval – finding the specific source of a particular statement.
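
To make the idea concrete, the sketch below reads tagged fragments out of an HTML snippet with Python's standard `html.parser`. The attribute name `data-digest-id` and the sample markup are assumptions for illustration; the article says only that `data-*` attributes are used, not which ones. The parser also assumes well-formed markup without unclosed void elements such as `<br>`.

```python
from html.parser import HTMLParser


class FragmentCollector(HTMLParser):
    """Collect the text of elements tagged with a (hypothetical) data-digest-id."""

    def __init__(self):
        super().__init__()
        self.fragments = {}
        self._open = []  # one entry per open element: its fragment id, or None

    def handle_starttag(self, tag, attrs):
        self._open.append(dict(attrs).get("data-digest-id"))

    def handle_endtag(self, tag):
        if self._open:
            self._open.pop()

    def handle_data(self, data):
        # Text belongs to every tagged element that currently encloses it.
        for fragment_id in self._open:
            if fragment_id is not None:
                self.fragments[fragment_id] = self.fragments.get(fragment_id, "") + data


HTML = (
    '<p>Plans vary. <span data-digest-id="deductible">'
    "A deductible is the amount paid before coverage begins.</span></p>"
)

collector = FragmentCollector()
collector.feed(HTML)
collector.close()
print(collector.fragments)
```

A retrieval system could then pair each fragment ID with the digest URL to form a citation that points at one sentence, not the whole page.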

4. Provenance Metadata Integration

Most crucial to preventing hallucinations is the system’s robust provenance tracking. Using W3C PROV standards and optional WikiData QIDs, each digest contains formal attribution data that machines can verify. This creates an auditable trail of information that AIs can check and cite when generating responses.

This provenance layer transforms vague attributions into machine-verifiable citations, addressing the critical problem of misattribution that drives many AI hallucinations.
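
A provenance record along these lines could be sketched with terms from the W3C PROV ontology. The property names (`prov:wasAttributedTo`, `prov:wasDerivedFrom`, `prov:generatedAtTime`) are part of PROV-O; the helper functions, the choice of which fields count as required, and the example.com URLs are assumptions for this sketch.

```python
PROV_NAMESPACE = "http://www.w3.org/ns/prov#"

# Assumption for this sketch: a citation needs all three of these to be checkable.
REQUIRED_FIELDS = (
    "prov:wasAttributedTo",
    "prov:wasDerivedFrom",
    "prov:generatedAtTime",
)


def make_provenance(entity_url, publisher_url, source_url, generated_at):
    """Build a JSON-LD provenance record for one digest."""
    return {
        "@context": {"prov": PROV_NAMESPACE},
        "@id": entity_url,
        "@type": "prov:Entity",
        "prov:wasAttributedTo": {"@id": publisher_url},
        "prov:wasDerivedFrom": {"@id": source_url},
        "prov:generatedAtTime": generated_at,
    }


def missing_provenance(record):
    """Return the required provenance fields that are absent or empty."""
    return [field for field in REQUIRED_FIELDS if not record.get(field)]


record = make_provenance(
    entity_url="https://example.com/digests/plan-glossary",
    publisher_url="https://example.com/about",
    source_url="https://example.com/articles/plan-basics",
    generated_at="2025-07-09T00:00:00Z",
)
print(missing_provenance(record))  # prints []
```

A consumer could refuse to cite any record for which `missing_provenance` returns a non-empty list, which is one simple way to turn attribution into something a machine can check.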

Real-World Validation from Perplexity.ai

Perplexity’s Key Findings

To test his approach in practice, Bynon submitted his patent PDFs to Perplexity.ai, one of the most sophisticated retrieval-based AI systems available. The results were significant – Perplexity understood the patents, accurately summarized the technical architecture, recognized the differences between the two patents, and concluded the methods were “highly likely to reduce hallucinations in AI systems.”

The AI highlighted the system’s use of W3C PROV for formal attribution, multi-format endpoints, and the entity-level and fragment-level bindings as key innovations that would improve AI truthfulness.

Patent Comparison and Differentiation

Perplexity’s analysis compared the two patents and their distinct applications. It recognized that the first patent established the core Semantic Digest framework for general content, while the second extended this architecture specifically for directory-based systems.

The AI identified differences in endpoint exposure, provenance handling, and target applications between the two patents – showing both the clarity of Bynon’s technical writing and the viability of the approach for machine understanding.

Full Perplexity analysis:

Directory-Based Implementation

RESTful Entity-Level Endpoints

The second patent builds upon the core innovation by implementing RESTful, entity-level endpoints specifically designed for directory-based content. This approach allows each entity in a directory (such as a product, business listing, or professional profile) to have its own semantic digest that machines can retrieve independently.

This creates a network of verifiable knowledge points that AI systems can navigate and cite with precision, significantly reducing the likelihood of hallucinations when discussing specific entities.
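
The routing behind entity-level endpoints can be sketched as a pure function that maps a path to a response. Everything specific here is hypothetical: the `/entities/<id>.<ext>` URL pattern, the `ENTITIES` data, and the two supported formats are assumptions, since the article does not publish the actual endpoint design.

```python
import json

# Hypothetical directory data keyed by entity ID.
ENTITIES = {"acme-plumbing": {"name": "Acme Plumbing"}}

MEDIA_TYPES = {"jsonld": "application/ld+json", "md": "text/markdown"}
PREFIX = "/entities/"


def resolve(path):
    """Return (status, media_type, body) for paths like /entities/<id>.<ext>."""
    not_found = (404, "text/plain", "not found")
    if not path.startswith(PREFIX):
        return not_found
    entity_id, _, ext = path[len(PREFIX):].rpartition(".")
    entity = ENTITIES.get(entity_id)
    if entity is None or ext not in MEDIA_TYPES:
        return not_found
    if ext == "jsonld":
        body = json.dumps(
            {
                "@context": "https://schema.org/",
                "@id": f"https://example.com{PREFIX}{entity_id}",
                "@type": "LocalBusiness",
                "name": entity["name"],
            }
        )
    else:
        body = f"# {entity['name']}\n"
    return 200, MEDIA_TYPES[ext], body


print(resolve("/entities/acme-plumbing.jsonld"))
print(resolve("/entities/unknown.jsonld"))
```

Each entity resolves independently, so a retrieval system can fetch and cite one listing without crawling the whole directory.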

Integration with Domain Dictionaries

A key feature of the directory-based implementation is its ability to dynamically integrate with domain dictionaries. These dictionaries provide standardized terminology and definitions specific to an industry or knowledge domain. By merging entity data with these dictionaries, the system creates context-aware semantic digests that understand industry-specific terms and relationships.

This domain-specific understanding helps prevent hallucinations in specialized fields like healthcare, legal, or financial services, where terminology precision can mean the difference between accurate information and dangerous misinformation.
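
A rough sketch of the merge step: terms referenced by an entity are looked up in a domain dictionary, and the definitions are attached to the output. The dictionary contents, the entity shape, and the `enrich` helper are all hypothetical; the actual merge logic is not described in the article.

```python
# Hypothetical domain dictionary for a health-insurance directory.
DOMAIN_DICTIONARY = {
    "copay": "A fixed amount paid for a covered service.",
    "deductible": "Amount paid before coverage begins.",
}


def enrich(entity, dictionary):
    """Attach dictionary definitions to the terms an entity references."""
    defined, undefined = [], []
    for term in entity["terms"]:
        definition = dictionary.get(term.lower())
        if definition is None:
            undefined.append(term)  # surfaced so editors can fill the gap
        else:
            defined.append({"term": term, "definition": definition})
    return {**entity, "definedTerms": defined, "undefinedTerms": undefined}


plan = {"name": "Example Silver Plan", "terms": ["Deductible", "Coinsurance"]}
enriched = enrich(plan, DOMAIN_DICTIONARY)
print(enriched["definedTerms"])
print(enriched["undefinedTerms"])  # prints ['Coinsurance']
```

Listing undefined terms explicitly, instead of dropping them, keeps the digest honest about what it does not define.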

WikiData QID Support

To improve interoperability with existing knowledge systems, Bynon’s directory implementation includes optional WikiData QID support. These unique identifiers allow entities in the system to link directly to their corresponding entries in WikiData – one of the world’s largest structured knowledge bases.

This connection builds a bridge between proprietary directories and the broader web of knowledge, allowing AI systems to contextualize information within established knowledge graphs and further reducing the chance of hallucinations through cross-reference verification.
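
In practice, optional QID support could be as small as validating the identifier and emitting a `sameAs` link. Wikidata item IDs take the form `Q` followed by a positive integer, and item URIs live under `http://www.wikidata.org/entity/`. The `link_entity` helper and the sample entity are assumptions for this sketch; Q42 is the Wikidata item for Douglas Adams.

```python
import re

QID_PATTERN = re.compile(r"Q[1-9]\d*")


def wikidata_uri(qid):
    """Return the Wikidata entity URI for a QID, rejecting malformed IDs."""
    if not QID_PATTERN.fullmatch(qid):
        raise ValueError(f"not a valid Wikidata QID: {qid!r}")
    return f"http://www.wikidata.org/entity/{qid}"


def link_entity(entity, qid=None):
    """Return a copy of the entity, with a sameAs link when a QID is given."""
    linked = dict(entity)
    if qid is not None:
        linked["sameAs"] = wikidata_uri(qid)
    return linked


author = link_entity({"@type": "Person", "name": "Douglas Adams"}, "Q42")
print(author["sameAs"])  # prints http://www.wikidata.org/entity/Q42
```

Because the QID is optional, entities without a Wikidata entry pass through unchanged.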

Industries and Applications

1. Knowledge Graph Development

A promising application for Bynon’s technology is in knowledge graph development. The semantic digest architecture provides a standardized way to present structured knowledge that can be automatically incorporated into knowledge graphs. This enables more accurate entity relationships, better factual grounding, and improved reasoning in AI systems that rely on these knowledge structures.

By providing verified, structured knowledge objects that maintain their provenance, the technology could speed up the development of trustworthy, industry-specific knowledge graphs.

2. Directory Systems Enhancement

The directory-specific implementation benefits business directories, professional listings, product catalogs, and other entity-based information systems. These directories can present their entities as semantic digests, making their content more accessible and trustworthy for AI consumption.

This transformation turns traditional directories into AI-ready knowledge sources, potentially increasing their value and utility in an AI-driven information ecosystem.

3. Retrieval-Augmented Generation

An immediate application is in Retrieval-Augmented Generation (RAG) systems – AI architectures that combine retrieval of external knowledge with generative capabilities. Bynon’s system provides the kind of structured, verifiable knowledge objects that RAG systems need to ground their generation in facts rather than fabrications.

By making content more retrievable, verifiable, and citable at a granular level, the technology addresses the main challenges that lead to hallucinations in these systems.
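
The retrieval half of such a pipeline can be sketched in a few lines. This is a toy under stated assumptions: the `FRAGMENTS` data and URLs are hypothetical, and word-overlap scoring stands in for the embedding search a production RAG system would more likely use. The sketch stops at building the prompt and does not call any model.

```python
import re

# Hypothetical citable fragments, each with a resolvable identifier.
FRAGMENTS = [
    {
        "id": "https://example.com/digests/plan-glossary#deductible",
        "text": "A deductible is the amount paid before coverage begins.",
    },
    {
        "id": "https://example.com/digests/plan-glossary#copay",
        "text": "A copay is a fixed amount paid for a covered service.",
    },
]


def tokenize(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, fragments, top_k=1):
    """Rank fragments by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        fragments,
        key=lambda fragment: len(question_words & tokenize(fragment["text"])),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question, fragments):
    """Build a prompt that asks the model to answer only from cited sources."""
    sources = "\n".join(
        f"[{number}] {fragment['text']} (source: {fragment['id']})"
        for number, fragment in enumerate(fragments, start=1)
    )
    return (
        "Answer using only the numbered sources and cite them.\n\n"
        f"{sources}\n\nQuestion: {question}"
    )


question = "What is a deductible?"
top = retrieve(question, FRAGMENTS)
prompt = build_prompt(question, top)
print(prompt)
```

Since each fragment carries its own identifier into the prompt, any citation in the generated answer can be traced back to a specific source statement.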

Memory, Not Ranking: The Future of AI Trust

What makes Bynon’s approach notable is its fundamental reframing of the AI hallucination problem. While others have focused on tweaking models or filtering results, Bynon recognized that the core issue was one of memory – how machines remember what they’ve read and how they verify and cite that information when generating responses.

“This is the layer beneath the prompt,” Bynon explains. “It’s not about ranking. It’s about remembrance.”

This memory-first approach could shift how we think about AI trust. Rather than treating hallucinations as inevitable and attempting to filter them after the fact, Bynon’s system addresses the root cause – the lack of structured, verifiable memory objects that machines can reliably cite.

With his provisional patent applications now filed with the USPTO under numbers 63/840,804 and 63/840,848, and the Trust Publishing stack live at TrustPublishing.com, the technology is positioned to impact AI truthfulness and trust. As AI systems become increasingly integrated into critical information workflows across industries, Bynon’s innovation could be the missing piece that finally allows us to trust what AI tells us.

TrustPublishing.com provides the framework needed to build truthful AI systems by focusing on how machines remember rather than just how they think.

Original story published on Medium: https://medium.com/@trust_publishing/inventor-files-system-to-stop-ai-hallucinations-and-ai-says-it-works-c774847230ee

TrustPublishing.com

101 W Goodwin St # 2487

Prescott, AZ 86303

United States